There are several ways in which schools can disadvantage boys’ education. One is by adopting feminised pedagogy, designed for girls but unappealing to boys. I discussed an example of this here. Another way in which boys’ education may be adversely affected is through low expectations and negative stereotyping. I give an example of this below. But a third way, upon which I wish to focus primarily in this article, is by teachers assessing boys more harshly than they assess girls. The evidence seems to be mounting that unfair teacher assessment, to the detriment of boys, is widespread. I review several sources testifying to this phenomenon. There is, of course, a fourth mechanism of male disadvantage: society’s tendency to focus only upon those areas where girls are doing less well, and to ignore areas where boys do less well. Athena SWAN is an example of this.

I take the opportunity to review two recent publications: a comprehensive meta-analysis of teacher assessment data by Voyer and Voyer, from the Psychological Bulletin (2014), and the most recent OECD / PISA report (published 2015). I will have more to say about the latter in a follow-up post.

This post cannot pretend to be a thorough review of all the literature. I have included only those sources with which I am familiar. There may well be other sources with contradictory findings which a comprehensive review would identify and critique.

Low Expectations and Negative Stereotyping

Source: Gender Expectations and Stereotype Threat, a study carried out in 2010 but published in October 2013 (one wonders what delayed publication), by Bonnie Hartley and Robbie Sutton, University of Kent, in Child Development, Volume 84, Issue 5, pages 1716–1733 and also summarised here.

This study presented 238 pupils aged four to 10 with a series of statements such as “this child is really clever” and “this child always finishes their work” and asked them to link the words to pictures of boys or girls. The study found that girls think that girls are cleverer than boys. This will come as no surprise, I expect. Girls at all ages said girls were cleverer.

But boys aged between four and seven were evenly divided as to which gender was cleverer.

And by the time boys reached seven or eight, they agreed with their female peers that girls were more likely to be cleverer and more successful.

We are constantly being told that girls lack self-confidence. There is no evidence of it from this study. But this study does raise the question as to why the experience of school seems so decisively to dent boys’ self-confidence. Researchers also found that the children believed adults shared the same opinion as them, meaning that boys felt they were not expected by their parents and teachers to do as well as girls and lost their motivation or confidence as a result.

In a separate experiment in the same study, 140 of the children were divided into two groups. The academics told the first group that boys do not perform as well as girls. The second group were not told this. All the pupils were tested in maths, reading and writing. The academics found the boys in the first group performed “significantly worse” than boys in the second group, while girls’ performance was similar in both groups. This appears to confirm that low expectations are a self-fulfilling prophecy.

The paper argues that teachers have lower expectations of boys than of girls and this belief fulfils itself throughout primary and secondary school. Girls’ performance at school may be boosted by what they perceive to be their teachers’ belief that they will achieve higher results and be more conscientious than boys. Boys may underachieve because they pick up on teachers’ assumptions that they will obtain lower results than girls and have less drive.

Pupils’ Perceptions of Teacher Biases

Source: Students’ Perceptions of Teacher Biases: Experimental Economics in Schools by Amine Ouazad and Lionel Page, January 2012 (Centre for the Economics of Education, London School of Economics).

This study presents the results of an experiment that involved about 1,200 grade 8 pupils (aged 13) across 29 schools in London, Manchester, and Liverpool. Students were handed a sum of £4, which they could either keep or partly stake as a bet on their own performance in a test where grading was partly discretionary. In a random half of the classrooms, grading was done anonymously by an external examiner. In the other random half, grading was done non-anonymously by the teacher. Thus differences in students’ betting behaviour across the anonymous and non-anonymous classrooms identified the effect of grading conditions on students’ beliefs.

The study showed that pupils’ perceptions were on average in line with teachers’ actual behaviour. The experiment involved students and teachers of different ethnicity, gender, and socio-economic status. But gender was the only significant factor in the results. Male students tended to bet less when assessed by a female teacher than by an external examiner or by a male teacher. This was consistent with female teachers’ grading practices; female teachers gave lower grades to male students. Female students bet more when assessed by a male teacher than when assessed by an external examiner or a female teacher.

The study provided no evidence that students’ beliefs depended on ethnicity or socio-economic status.

But the gender results showed that students’ beliefs tend to increase the gender gap in investment and effort. A male teacher increases the effort and investment of a female student. A female teacher tends to lower the effort and investment of male students. These observations are rather unfortunate in view of the preponderance of women teachers in our schools.

Key Stage 2 SATS

In an earlier post I have already described how signs of teacher bias against boys in Key Stage 2 SATS can be discerned (UK data). This is done by calculating the difference between teacher assessments and test results, and comparing this difference for boys and girls. Where there is a gender effect it is always in favour of the girls. This applies in both maths and reading, but more so in the latter.
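The comparison described above amounts to a difference of differences, which can be sketched in a few lines. This is only an illustration of the arithmetic: the function names are my own, and the numbers in the usage line are made-up placeholders, not the Key Stage 2 figures.

```python
def assessment_gap(teacher_score: float, test_score: float) -> float:
    """Teacher assessment minus test result (same units, e.g. percentage points)."""
    return teacher_score - test_score


def gender_effect(girls_teacher: float, girls_test: float,
                  boys_teacher: float, boys_test: float) -> float:
    """Girls' assessment gap minus boys' assessment gap.

    A positive value means teacher assessment, relative to the test,
    is more favourable to girls than to boys.
    """
    return (assessment_gap(girls_teacher, girls_test)
            - assessment_gap(boys_teacher, boys_test))


# Hypothetical placeholder values, chosen only to show the calculation.
effect = gender_effect(girls_teacher=80.0, girls_test=78.0,
                       boys_teacher=75.0, boys_test=76.0)
print(effect)  # 3.0
```

A value of zero would mean teachers and tests disagree equally for both sexes, which is the no-bias baseline for this particular measure.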

GCSE versus O Levels

In the same blog post I also observed that the gender performance gap for 16 year olds arose just when O Levels were replaced by GCSEs in 1987. O Levels were awarded on exam results alone. GCSEs are partly based on course work, marked by the teachers. Consequently, the introduction of GCSEs provided the opportunity for teacher bias to influence the attainment of pupils for the first time in these awards to 16 year olds. This does not constitute proof of teacher bias, but it does seem to be a remarkable coincidence of timing.

GCSEs versus PISA Tests (2006)

Source: Achievement of 15-year-olds in England: PISA 2006 National Report, by Bradshaw, J., Sturman, L., Vappula, H., Ager, R. and Wheater, R. (2007), National Foundation for Educational Research. (PISA is the OECD “Programme for International Student Assessment”.)

Further independent support for the contention that boys’ underperformance at GCSE may be largely a result of the nature of these awards is obtained from the OECD 2006 PISA study. The PISA involved assessment of 15 year olds in reading, mathematics and science via tests developed for the PISA exercise and intended to provide, amongst other things, a standard by which different nations can compare the effectiveness of their education arrangements. The UK component involved 502 schools and over 13,000 pupils.

In the science PISA males significantly outperformed females. The difference in the science scores of females and males was largely attributable to differential performance on the “explaining phenomena scientifically” competency scale. This indicates that males did better than females in such skills as applying their scientific knowledge, identifying or describing scientific phenomena and predicting changes. In contrast with these PISA results, girls get more top grades (A*, A and B) in GCSE science than boys, adding to the suspicion that the GCSE awards may be biased.

In the maths PISA males also did significantly better than females. The difference of 17 scale points between females and males was higher than the OECD average of 11 scale points difference. This was one of the highest differences within the 44 comparison countries with only three countries having a higher figure. The 2006 PISA report itself remarks on the contrast between this and the GCSE results in maths, thus: “It is interesting to compare this pattern of male advantage (i.e., in the PISA tests) with that found in other assessments in England……The GCSE mathematics qualification in 2007 showed no gender difference. 14 per cent of both males and females achieved grade A* or A……It seems then that results from measures that are used regularly in England do not tell the same story about gender differences as the PISA survey.” Again this adds to the suspicion that the GCSE awards are biased.

A Levels versus Standard Attainment Tests (SATs)

Source: Relationships between A level Grades and SAT Scores in a Sample of UK Students by Kirkup, J. Morrison and C. Whetton, National Foundation for Educational Research, paper presented at the Annual Meeting of the American Educational Research Association, New York, March 24-28, 2008.

This research was based on a comparison of A level performance against the results of a Standard Attainment Test (SAT). It involved approximately 8000 students (46% male) in English schools and Further Education colleges, who took the SAT Reasoning Test in autumn 2005 during the final year of their two-year A level courses. For most students this is the academic year in which their 18th birthday occurs. The SAT comprises three main components: Critical Reading, Mathematics and Writing.

Female students had higher total GCSE and A level points scores and achieved significantly higher scores on the SAT Writing component than male students. There was no significant difference in the scores for male and female students on the SAT Reading component. Male students performed significantly better on the SAT Mathematics component and on the SAT as a whole.

That males performed better on the SAT as a whole is remarkable given that the three tests, Critical Reading, Mathematics and Writing, might have been supposed to consist of two playing to female strengths and just one playing to male strengths. The main male strength in traditional UK exams, science, was not represented in the SAT.

Males did better on the SAT than expected based on their A Level attainment. In contrast, female students, some ethnic minorities, students with special educational needs (SEN) and students learning English as an additional language (EAL) appeared to perform less well on the SAT than expected based on GCSE and A Level attainment compared to the default categories (i.e. boys, white students, etc).

Whilst these results do not establish that GCSEs and A Levels are biased, they do suggest that female dominance in these UK awards may have more to do with the nature of the award process itself than with the candidates’ merits. The results seem to imply that this female dominance could be overturned by an alternative award or examination system. This could have substantial implications for the relative numbers of men and women attending universities in the UK.

Evidence from the USA at Primary Level

Source: Non-Cognitive Skills and the Gender Disparities in Test Scores and Teacher Assessments: Evidence from Primary School by Christopher Cornwell, David Mustard and Jessica Van Parys, (IZA Discussion Paper No. 5973, September 2011, and published in The Journal of Human Resources, Winter 2013, vol. 48, 236-264). A summary can be found here.

This study found that, in every subject at primary school, boys are represented in teacher-specified grade distributions below where their test scores would predict. The Abstract of the paper reads as follows,

Using data from the 1998–99 ECLS-K cohort, we show that the grades awarded by teachers are not aligned with test scores. Girls in every racial category outperform boys on reading tests, while boys score at least as well on math and science tests as girls. However, boys in all racial categories across all subject areas are not represented in grade distributions where their test scores would predict. Boys who perform equally as well as girls on reading, math, and science tests are graded less favourably by their teachers, but this less favourable treatment essentially vanishes when non-cognitive skills are taken into account. For some specifications there is evidence of a grade “bonus” for boys with test scores and behaviour like their girl counterparts.

Cornwell et al attribute the marking-down of boys to the teachers awarding credit for “non-cognitive development”. This is defined as attentiveness, task persistence, eagerness to learn, learning independence, flexibility and organization. To be more blunt, these phrases mean “well behaved”. To be even more blunt, they mean “behave like girls”. Simply put, the gender disparity in this US study is largely due to boys being marked down for not being girls.

In a newspaper article Cornwell commented,

Eliminating the factor of “non-cognitive skills” almost eliminates the estimated gender gap in reading grades. He said he found it “surprising” that although boys out-perform girls on math and science test scores, girls out-perform boys on teacher-assigned grades. In science and general knowledge, as in math skills, the data showed that kindergarten and first grade white boys’ grades “are lower by 0.11 and 0.06 standard deviations, even though their test scores are higher.”

This disparity continues and grows through to the fifth grade, with white boys and girls being graded similarly, “but the disparity between their test performance and teacher assessment grows.” The disparity between the sexes in school achievement also far outstrips the disparity between ethnicities. “From kindergarten to fifth grade,” he found, “the top half of the test-score distribution” among whites is increasingly populated by boys, “while the grade distribution provides no corresponding evidence that boys are out-performing girls”.

An earlier study, Self-discipline gives girls the edge: Gender in self-discipline, grades, and achievement test scores (Journal of Educational Psychology, Vol 98(1), Feb 2006, 198-208) found that throughout elementary, middle, and high school, girls earn higher grades than boys in all major subjects. Girls, however, did not outperform boys on achievement tests and did worse on an IQ test. The report explained this discrepancy on the basis that girls were “more self-disciplined, and this advantage is more relevant to report card grades than achievement or aptitude tests”. The authors did not elucidate the mechanism by which “self-discipline” gave rise to better grades, but the implication appears to be that, as with Cornwell et al, the teachers are awarding credit for “non-cognitive” issues.

Evidence from Northern Ireland

Source: Local newspaper articles by Hilary White, here and here.

In these hard-hitting articles, Hilary White had this to say in respect of a study by the University of Ulster in Northern Ireland,

For decades we’ve listened to teachers and ‘academics’ shedding crocodile tears as they bemoan the terrible ‘failure’ of the boys in their classes falling behind girls. Now a five year University study has shown why. Those same teachers are just deliberately marking boys down – even when they do better in tests and exams than their female counterparts.

A five-year research project, funded by the Departments of Education and Justice in Northern Ireland, found “systemic flaws” in teaching techniques led to teachers discriminating overtly against male students. The shocking survey blows the lid on how since the 1970s, when feminist critics complained that the school system favoured “male thinking,” grades have been decided not by performance and knowledge, but on a teacher’s whim.

Feminists condemned intelligence and knowledge as ‘too masculine’. And they argued successfully that pupils should be marked on ‘emotional intelligence’ (a phrase which means nothing, and so is entirely subjective), and social skills (which means being nice). Facts, dates, rote learning, and maths skills went out of the window and “fair” teaching styles were introduced in which no matter what a pupil’s test results, what the teacher thought of them held sway.

As a result mainly female teachers have been expressing low views of their boys students and favouring girls. They discriminate against blacks and hispanics in the US, while in Ireland the survey found boys from rough areas of Belfast were particularly looked down on, and so marked down. Dr. Ken Harland and Sam McCready from the University of Ulster said that the problem of apparent boys underachievement at school has been clear for “several decades.”

But they said “it was extremely difficult for the research team to find specific strategies addressing boys’ underachievement.” “Although teachers who were interviewed as part of this study recognised the predominance of boys with lower academic achievement, they generally did not take this into account in terms of learning styles or teaching approaches,” Dr Harland said.

Hilary White’s position on gender politics is clear. However….

The Northern Irish study to which Hilary White refers is Taking Boys Seriously – A Longitudinal Study of Adolescent Male School-Life Experiences in Northern Ireland by Ken Harland and Sam McCready of the University of Ulster and the Centre for Young Men’s Studies, published by Northern Ireland Statistics and Research Agency, Department of Justice (Northern Ireland). But I could find no support for White’s commentary in the report itself. There are two possible explanations. One is that White’s review is not a faithful representation of Harland and McCready’s views or findings. The other is that it is a faithful representation of Harland and McCready’s views but that these authors were constrained from expressing such forthright opinions in their report – noting that it is a Department of Justice report. I know not which is true, but I issue a warning about the reliability of the above newspaper quote.

Meta-Analysis of Teachers’ Assessments – Voyer and Voyer (2014)

This is a major new piece of work which I have reviewed in rather more depth.

Source: Gender Differences in Scholastic Achievement: A Meta-Analysis by Daniel Voyer and Susan Voyer, Psychological Bulletin, 2014, Vol. 140, No. 4, 1174–1204.

In common with the studies discussed above, this paper also notes that a female advantage in teacher-assigned school marks is a common finding in education research, and it extends to all course subjects (language, maths, science). This contrasts with what is found on achievement tests in which males generally do better in science and maths.

Data for achievement tests have included meta-analyses, i.e., compiling the results of many individual studies. Hence these findings may be regarded as statistically reliable. In contrast, Voyer and Voyer noted that research on teacher-assigned school marks had not previously been examined as a whole. This provided the motivation for their work. It is a systematic meta-analysis of gender differences in teacher-assigned school marks.

Some 15,042 published articles (including 753 articles in languages other than English) and 2,265 theses and dissertations were reviewed for possible inclusion in the meta-analysis. Researchers whose articles were retrieved for inclusion in the analysis and who published this work in the last 10 years were contacted by e-mail with a request for similar unpublished research. The final analysis was based on 502 “effect sizes” (i.e., pieces of data indicating the difference in performance of the genders). Effect sizes were derived exclusively from the school marks obtained from teachers’ assessments rather than from tests.

It is worth noting that Voyer and Voyer do not challenge the findings based on testing, that males outperform females in maths and science, quoting several references reporting these findings, e.g., Hyde, Fennema, & Lamon; Else-Quest, Hyde, & Linn; Hedges & Nowell; and Nowell & Hedges.

Voyer and Voyer observe that, “Although gender differences follow essentially stereotypical patterns on achievement tests, for whatever reasons, females generally have the advantage on teacher-assigned school marks regardless of the material. This gender difference has been known to exist for many years and has persisted in recent years, for both college students and primary school pupils.” They give a range of references supporting this contention, including Kimball and Pomerantz, Altermatt & Saxon.

It is amusing to read the knots these authors tie themselves in trying to rationalise the contradictory results between test scores and teacher-assigned marks. The most obvious explanation, that the subjective element of teachers’ marking allows their marking to be contaminated by gender bias, is rarely even raised as a possibility. The partisan nature of the authors’ concerns is evident in many cases. For example, the Abstract of the above Pomerantz, Altermatt & Saxon paper (that’s Eva, Ellen and Jill, incidentally) informs us that,

Girls outperform boys in school…..However, girls are also more vulnerable to internal distress than boys are….Girls outperformed boys across all 4 subjects but were also more prone to internal distress than boys were. Girls doing poorly in school were the most vulnerable to internal distress. However, even girls doing well in school were more vulnerable than boys were.

Darn good job those boys being marked down by their teacher are Neanderthal, thick-skinned clods who couldn’t give a shit, eh? Otherwise someone might be concerned.

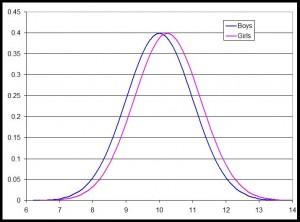

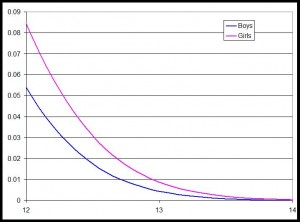

But back to Voyer and Voyer’s analysis. They used a measure of gender difference, d, defined as the difference between the mean mark for females and the mean mark for males, divided by the pooled standard deviation (recalling that we are talking about teacher-assigned marks here). In other words, where d is positive it indicates the number of standard deviations by which the average female mark exceeded the average male mark.
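Written out, the measure looks as follows. The verbal definition above does not say how the standard deviations are pooled, so the usual pooling formula shown here is an assumption on my part, as are the symbol names.

$$
d = \frac{\bar{x}_F - \bar{x}_M}{s_p}, \qquad
s_p = \sqrt{\frac{(n_F - 1)\,s_F^2 + (n_M - 1)\,s_M^2}{n_F + n_M - 2}}
$$

Here $\bar{x}_F$ and $\bar{x}_M$ are the mean teacher-assigned marks for females and males, $s_F$ and $s_M$ the corresponding standard deviations, and $n_F$ and $n_M$ the group sizes. A positive d therefore means the female mean is the higher of the two.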

The results showed a significant female advantage in all subjects. The best estimate of d over all data and all subjects was 0.238, with a 95% Confidence Interval of [0.201,0.277], obtained by combining upper and lower bound estimates. The largest effects were observed for language courses (d = 0.374). The magnitude of gender differences was significantly smaller in maths (d = 0.069) and science (d = 0.154). But even in these subjects the average difference is positive, i.e., in favour of females.

Even for the smaller disparities in maths and science, the 95% Confidence Interval did not include zero, and hence females were graded significantly higher even in these subjects (in the statistical sense of “significant”).

Figure 1 illustrates what the average d of 0.238 looks like in terms of the distribution of male and female scores (assuming a normal distribution). Figure 2 shows the same figure, zooming in on the region corresponding to the top 3% of people. This illustrates how substantial a difference a d of 0.238 actually is when the tail of the distribution is considered (noting that the tail may represent those people getting places in the best universities). In fact, if we consider, say, the top 10% of people, with a d of 0.238 these would comprise roughly 60% females and 40% males. I invite you to compare this with the current university gender ratio. (This crude illustration assumes male and female distributions with the same variance, which will not really be true).

Figure 1: Illustration of d = 0.238 for normal distributions with the same variance (arbitrary mean)

Figure 2: As Figure 1 zoomed in to the top 3% of people
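The 60/40 split quoted above for the top 10% can be checked with a short calculation. The sketch below makes the same crude assumptions as the figures (normal distributions with equal variance), plus one more of my own: equal numbers of males and females. The function name is hypothetical.

```python
from statistics import NormalDist


def female_share_of_top(d: float, top_fraction: float) -> float:
    """Share of females among the top `top_fraction` of a mixed population.

    Assumes male marks ~ N(0, 1), female marks ~ N(d, 1), and equal
    numbers of males and females.
    """
    males = NormalDist(mu=0.0, sigma=1.0)
    females = NormalDist(mu=d, sigma=1.0)

    def fraction_above(t: float) -> float:
        # Fraction of the combined population scoring above threshold t.
        return 0.5 * (1 - males.cdf(t)) + 0.5 * (1 - females.cdf(t))

    # Bisection for the threshold that cuts off the requested top fraction.
    lo, hi = -10.0, 10.0
    for _ in range(100):
        mid = (lo + hi) / 2
        if fraction_above(mid) > top_fraction:
            lo = mid  # threshold too low: too many people above it
        else:
            hi = mid
    threshold = (lo + hi) / 2

    female_tail = 1 - females.cdf(threshold)
    male_tail = 1 - males.cdf(threshold)
    return female_tail / (female_tail + male_tail)


share = female_share_of_top(d=0.238, top_fraction=0.10)
print(f"Female share of top 10%: {share:.0%}")  # roughly 60%
```

Shrinking `top_fraction` pushes the female share higher, which is the point of Figure 2: a modest d matters most in the tail.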

The most important finding is therefore that there is a consistent, and statistically significant, female advantage in school marks for all subjects. In contrast, as already noted, meta-analyses of performance on standardized tests have consistently reported gender differences in favour of males in mathematics and science. Voyer and Voyer chose to spin this as follows,

This contrast in findings makes it clear that the generalized nature of the female advantage in school marks contradicts the popular stereotypes that females excel in language whereas males excel in math and science. Yet the fact that females generally perform better than their male counterparts throughout what is essentially mandatory schooling in most countries seems to be a well-kept secret considering how little attention it has received as a global phenomenon. In fact, the popular press and elected representatives still focus on findings that fit stereotypical expectations.

Voyer and Voyer appear to be accepting that teachers’ grades are a reliable guide to ability or performance, and hence that the standardized tests are not. To them, therefore, the issue is “why does society persist in believing that males are better at maths and science when the grading indicates otherwise?” They take a couple of pages over discussing several possible explanations. But they perversely refuse to recognise that there is an elephant in the room.

To recap: marks obtained by testing, which one can take to be objective, universally indicate that males do better in maths and science. Voyer and Voyer do not challenge this and appear to accept this as a fact, citing many references to this effect. In contrast, Voyer and Voyer’s own analysis shows that teachers grade females higher than males in everything, including maths and science. Unlike testing, teachers’ grading is open to subjective influence. The elephant in the room is the subjective influence in teachers’ assessment. Voyer and Voyer play down this factor.

To be fair they do write in the introductory sections, “Many attempts have been made to explain the apparent contradiction between what is observed on gender differences with achievement tests and with actual school performance…… An in-depth coverage of these factors is beyond the scope of the present article”. Also, in the Discussion they do mention the possibility that, “gender differences in class behaviour could affect teachers’ subjective perceptions of students, which in turn might affect their grades. This subjective component of school marks should not be overlooked as it has been shown to affect teachers’ evaluation of their students, potentially leading to sex-biased treatment and self-fulfilling prophecies.” They cite several references which promote this perspective, including Bennett, Gottesman, Rock, & Cerullo and Jussim, Robustelli, & Cain.

These references are of interest in their own right, demonstrating that an awareness of teacher bias against boys has been around in academic circles for more than twenty years at least.

But Voyer and Voyer show a disturbing lack of compassion in interpreting the implications of their finding. They illustrate it thus, “a crude way to interpret this finding is to say that, in a class of 50 female and 50 male students, there could be eight males who are forming the lower tail of the class marks distribution. These males would be likely to slow down the class.” Those males at the bottom of the class will be the ones marked down by the teacher, but which came first, the bad behaviour or the poor assessment? And, moreover, does either the behaviour or the teacher’s assessment fairly indicate ability?

The most peculiar spin that Voyer and Voyer put on their findings is in relation to the longstanding nature of the teachers’ assessments in favour of females. They write,

Boy-crisis proponents suggest that males have started lagging behind females in terms of school achievement only recently…..This claim was not supported by the results…..the data in the present sample, ranging in years from 1914 to 2011, suggest that boys have been lagging for a long time and that this is a fairly stable phenomenon. Accordingly, it might be more appropriate to claim that the boy crisis has been a long-standing issue rather than a recent phenomenon.

Are they suggesting that, because what they refer to, disparagingly, as the “boy-crisis”, is of very long standing, it is therefore less important?

And if it is true that girls have always done better, how does this fit with the frequent claim that girls used to be horribly disadvantaged in education?

Or are they saying that females are cleverer than males in everything and always have been and this is being hidden by society because patriarchy? The latter position can be adopted provided that one accepts teachers’ assessments as truly indicative, and ignores the contradictory evidence from testing. This appears to be the direction in which Voyer and Voyer lean. But the presumption that teachers’ assessments are a superior indicator of achievement than testing is, at best, unsupported. In fact it is perverse given the obvious influence of subjective factors in the former.

Finally, in the UK, scholastic awards at age 16 and 18 were based entirely on examination prior to 1987. Elements of course work, and hence of teacher assessment, only entered these awards after 1987, and the gender gap in attainment also occurred, or became more significant, at this time. There is a correlation, if only temporal, between attainment and the involvement of teacher assessment in the awards. Hence, even if a teacher bias in favour of females has been with us forever, it would not have been apparent in the UK award system prior to 1987.

Is the “boy crisis” actually a manifestation of teacher bias in a culture which increasingly labels masculine behaviour as reprehensible?

The 2012 OECD PISA Report

I intend to write a more complete account of the latest PISA report in a follow-up post, as there is such a lot of interesting material in it. Here I confine myself to a few observations pertinent to the issue of teacher bias in marking.

Teacher Bias from the 2012 PISA

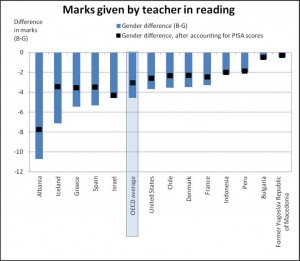

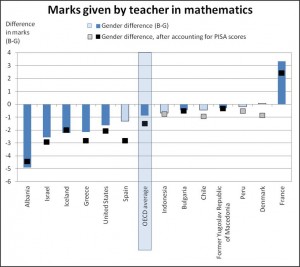

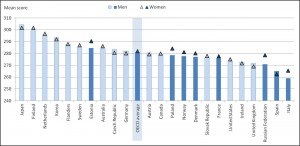

The following are extracts from the 2012 PISA report. They are not contiguous. I have lifted quotes from different Sections. The Figures referred to are given below (Figures 3a and 3b).

Many teachers reward organisational skills, good behaviour and compliance with their instructions by giving higher marks to students who demonstrate these qualities. As Figures 3a and 3b suggest, teachers and school personnel may sanction boys’ comparative lack of self-regulation by giving them lower marks and requiring them to repeat grades. When comparing students who perform equally well in reading, mathematics and science, boys were more likely than girls to have repeated at least one grade before the age of 15 and to report that they had received lower marks in both language-of-instruction classes and mathematics. But it is unclear how “punishing” boys with lower grades or requiring them to repeat grades for misbehaviour will help them; in fact, these sanctions may further alienate them from school.

Figure 3a: Gender difference (b-g) in marks awarded by their teacher (reading)

Figure 3b: Gender difference (b-g) in marks awarded by their teacher (maths)

An analysis of students’ marks in reading and mathematics reveals that while teachers generally reward girls with higher marks in both mathematics and language-of-instruction courses, after accounting for their PISA performance in these subjects, girls’ performance advantage is wider in language-of-instruction than in mathematics. This suggests both that girls may enjoy better marks in all subjects because of their better classroom discipline and better self-regulation, but also that teachers hold stereotypical ideas about boys’ and girls’ academic strengths and weaknesses. Girls receive much higher-than-expected marks in language-of-instruction courses because teachers see girls as being particularly good in such subjects. Teachers may perceive boys as being particularly good in mathematics; but because boys have less ability to self-regulate, their behaviour in class may undermine their academic performance, making this hypothesis difficult to test.

The last sentence means that they are hypothesising that teachers would be inclined to mark boys up in maths, owing to the cultural stereotype that boys are better at maths, but that this cannot be seen in the data because, in fact, teachers mark boys down in maths, as in everything else! Hilarious. Let me paraphrase: “We claim that teachers would mark boys up in maths, but this cannot be seen in the data because the tendency is masked by the fact that, in practice, they mark them down”. Excellent. Love it.

The report also shows that teachers generally award girls higher marks than boys, given what would be expected after considering their performance in PISA. This practice is particularly apparent in language-of-instruction courses. Girls’ better marks may reflect the fact that they tend to be “better students” than boys: they tend to do what is required and expected of them, thanks to better self-regulation skills, and they are more driven to excel in school. In addition, girls appear to be stronger in displaying the knowledge they have acquired (i.e. solving an algebraic equation) than in problem solving, the latter of which is a central component of the PISA test. But this report reveals that the gender gap observed in both school marks and PISA scores is not the same in both language-of-instruction classes and mathematics. The fact that it is much wider in the language-of-instruction courses suggests that teachers may harbour conscious or unconscious stereotyped notions about girls’ and boys’ strengths and weaknesses in school subjects, and, through the marks they give, reinforce those notions among their students and their students’ families.
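To make concrete what comparing marks "after accounting for PISA performance" might involve, here is a minimal sketch in Python. It is purely illustrative: the numbers are made up, the function names (`fit_line`, `adjusted_gap`) are my own, and the approach (fit a straight line of teacher mark against test score, then compare the average leftover for boys and girls) is an assumption about the general idea, not the OECD's actual method or data.

```python
def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b*x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    b = sxy / sxx
    a = mean_y - b * mean_x
    return a, b


def adjusted_gap(scores, marks, is_boy):
    """Boys-minus-girls (b-g) difference in marks, after removing the
    part of each mark that the test score predicts."""
    a, b = fit_line(scores, marks)
    residuals = [m - (a + b * s) for s, m in zip(scores, marks)]
    boys = [r for r, flag in zip(residuals, is_boy) if flag]
    girls = [r for r, flag in zip(residuals, is_boy) if not flag]
    return sum(boys) / len(boys) - sum(girls) / len(girls)


# Hypothetical data: boys and girls with identical test scores,
# but every boy is given a teacher mark half a point lower.
test_scores = list(range(400, 601, 20))
scores = test_scores + test_scores
is_boy = [False] * len(test_scores) + [True] * len(test_scores)
marks = [0.01 * s + 1.0 for s in test_scores] + \
        [0.01 * s + 0.5 for s in test_scores]

gap = adjusted_gap(scores, marks, is_boy)
print(round(gap, 2))  # -0.5
```

A negative result follows the (b-g) convention of Figures 3a and 3b: boys receive lower marks than girls with the same test score. In real data the two sexes would not have identical score distributions, so a proper analysis would fit score and sex together rather than in two steps.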

Adult versus 15 Year Old Literacy and Numeracy: PISA v PIAAC

And finally, what for me was a complete surprise. The PISA tests are taken by 15 year olds. But the PISA report compares its findings with those of a separate OECD study of adult literacy and numeracy, using its results for the age range 16 to 29. This is the Programme for the International Assessment of Adult Competencies (PIAAC). The PIAAC “assesses the proficiency of adults in literacy, numeracy and problem solving in technology-rich environments. These skills are ‘key information-processing competencies’ that are relevant to adults in many social contexts and work situations, and necessary for fully integrating and participating in the labour market, education and training, and social and civic life.”

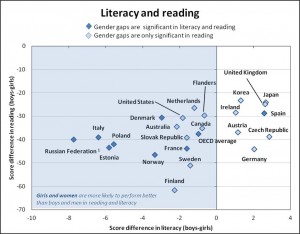

The result is truly remarkable because the gender gap in reading, which is emphatic across all countries in the PISA at age 15 (and indeed in all school-age studies), disappears for adult literacy! This is illustrated by Figure 4, which shows that any gender gap in adult literacy is negligible across all countries plotted, including the UK.

Figure 5 compares the PISA gender gap in reading at age 15 (negative means girls do better) with the PIAAC gender gap at age 16-29 in literacy. The highly emphatic gap in favour of 15 year old girls is dramatically reduced across all countries plotted, and for many countries actually reverses, including the UK. The data indicate that, as boys and girls leave compulsory schooling and enter either further education and training or work, the gap in literacy proficiency narrows considerably. Indeed, if anything, young men tend to outperform young women.

While teenage boys may be less likely than teenage girls to engage in activities that allow them to practice and build their literacy skills, as they mature they are required to read and write in their work as much as, if not more than, women are. Thus they are often able to catch up with, if not surpass, women’s skills in literacy. Surprisingly, and contrary to popular belief, the PISA report presents data showing that men also do more reading and writing at home than women, though what they read may tend to be professional journals or publications, manuals or reference materials, diagrams, maps or schematics.

What are we to make of the dramatic change in male proficiency in reading/literacy? Of course, the two measures are not the same, and that will be part of the difference. But does this observation add to the suspicion that males are unfairly treated in school, either by unfair marking or by a feminised pedagogy? Or does it mean, perhaps, that men become engaged in reading and writing only when there is something to be gained from it, such as a requirement in paid employment? These two explanations are not necessarily as distinct as they appear at first sight. There is a third possibility. Perhaps males simply mature later. Maybe girls reach their reading peak at 15, whereas males do not do so until some time later. Who knows, but that male disadvantage in literacy does not persist beyond school age is a significant finding of which I was not previously aware.

Figure 4: Gender difference (b-g) in literacy amongst 16-29 year olds determined from the 2012 Survey of Adult Skills (PIAAC)

Figure 5: Gender differences (b-g) in reading from the 2012 PISA for 15 year olds (y axis) versus gender differences (b-g) in literacy from the 2012 PIAAC for 16-29 year olds (x axis)

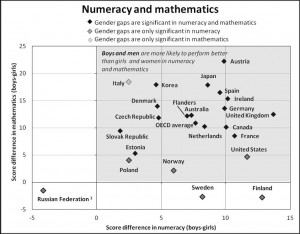

In stark contrast to the results of the PIAAC for literacy, the results for numeracy (Figure 6) indicate that the male dominance in maths at age 15 (from the PISA) persists largely unchanged, on average, into adulthood. The PIAAC still shows a similar level of male dominance in numeracy at age 16-29 for most countries, including the UK. It would appear, then, that among adults there tends to be male dominance in numeracy and broad equality in literacy.

Figure 6: Gender differences (b-g) in maths from the 2012 PISA for 15 year olds (y axis) versus gender differences (b-g) in numeracy from the 2012 PIAAC for 16-29 year olds (x axis)